Tish Shute of Ugotrade generously invited me to present at Augmented Reality Event 2011 yesterday in a session on augmented reality user experience. My time slot was relatively short, and she challenged me to talk outside of the usual topics, so I chose to talk about something that has interested me for a long time: the use of non-visual senses to communicate information about the information shadows around us. In the process, I humbly decided to rename "augmented reality" (because I'm somewhat obsessed with terminology). My suggested replacement term is somatic data perception. Also, as an intro to my argument, I decided to do a back-of-the-envelope calculation of the bandwidth of foveal vision, which turns out to be pretty low.

Here is the Slideshare version:

Scribd, with notes:

Somatic Data Perception: Sensing Information Shadows

You can download the PDF (530K).

Here's the transcript:

Good afternoon!

First, let me tell you a bit about myself. I'm a user experience designer and entrepreneur. I was one of the first professional Web designers, starting in 1993. Since then I've worked on the user experience design of hundreds of web sites. I also consult on the design of digital consumer products, and I've helped a number of consumer electronics and appliance manufacturers create better user experiences and more user-centered design cultures.

In 2003 I wrote a how-to book of user research methods for technology design. It has proven to be somewhat popular, as such books go.

Around the same time as I was writing that book, I co-founded a design and consulting company called Adaptive Path.

I wanted to get more hands-on with technology development, so I founded ThingM with Tod E. Kurt about five years ago.

We're a micro-OEM. We design and manufacture a range of smart LEDs for architects, industrial designers, and hackers. We also make prototypes of finished objects that use cutting-edge technology, such as our RFID wine rack.

I have a new startup called Crowdlight.

[Now that we've filed our IP, I can say that, roughly speaking, Crowdlight is a lightweight hardware networking technology that divides a space into small sub-networks. In AR, this can provide precise location information for registering location-based data onto the world, but it's also useful in many other ways for layering information precisely onto the world. We think it's particularly appropriate for the Internet of Things, for entertainment aimed at large crowds, and for infusing information shadows into the world.]

This talk is based on a chapter from my new book. It's called "Smart Things" and it came out in September. In the book, I describe an approach for designing digital devices that combine software, hardware, physical, and virtual components.

Augmented reality has a name problem. It sets the bar very high and implies that you need to fundamentally alter reality or you're not doing your job.

This in turn implies that you have to capture as much reality as possible, and that you have to immerse people as much as possible.

This naturally leads to attempts to take over vision, since vision is how we perceive most of the world around us. If we were bats, we would have started with hearing; if we were dogs, smell. But we're humans, so for us reality is vision.

The problem is that vision is a pretty low-bandwidth sense. Yes, it's possibly the highest-bandwidth sense we have, but it's still low bandwidth.

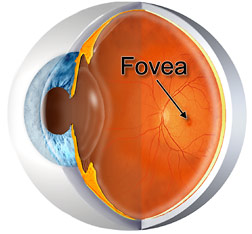

This morning I decided to do a back-of-the-envelope estimate of how much bandwidth our vision has. It's an estimate by a non-scientist, so excuse it if it's way off. Anyway, I started with the fovea, which typically has between 30,000 and 200,000 cones in it.

The fovea covers only a small part of the visual field, so to compensate, our eyes move in saccades, which last between 20ms and 200ms, or 5 to 50 times per second.

So my back-of-the-envelope calculation puts eye bandwidth somewhere between 100 bits per second and 10K bits per second.

That's many orders of magnitude slower than a modern front-side bus.
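The arithmetic behind two of these steps can be sketched in Python: turning saccade durations into saccades per second, and comparing the estimated eye bandwidth with a bus. This is a minimal sketch, not the calculation from the slides. It takes the 20ms to 200ms saccade durations and the 100 to 10K bits-per-second range as given, and does not try to reproduce how the cone counts become that range, since those intermediate steps aren't spelled out here. The bus figure is an assumption, not something from the talk: a 64-bit front-side bus running at 1,333 million transfers per second, typical of that era. The gap depends heavily on which bus figure you plug in.

```python
import math

# Saccade durations quoted in the talk, in milliseconds.
SACCADE_MS = (20, 200)

# Eye bandwidth range from the talk's estimate, in bits per second.
EYE_BPS = (100, 10_000)

# ASSUMPTION (not from the talk): a front-side bus moving 64 bits per
# transfer at 1,333 million transfers per second.
BUS_BPS = 64 * 1_333e6


def saccades_per_second(duration_ms: float) -> float:
    """Convert a saccade duration in milliseconds into a rate per second."""
    return 1000.0 / duration_ms


def orders_of_magnitude(fast_bps: float, slow_bps: float) -> float:
    """Base-10 logarithm of the ratio between two bandwidths."""
    return math.log10(fast_bps / slow_bps)


rates = [saccades_per_second(ms) for ms in SACCADE_MS]     # [50.0, 5.0]
gaps = [orders_of_magnitude(BUS_BPS, bps) for bps in EYE_BPS]

print(f"saccades per second: {rates[1]:.0f} to {rates[0]:.0f}")
print(f"bus vs. eye: {gaps[1]:.1f} to {gaps[0]:.1f} orders of magnitude")
```

Swapping in a different bus figure only changes `BUS_BPS`; the logarithm makes it easy to see how the assumed hardware shifts the comparison.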

The brain deals with this through a series of ingenious filters and adaptations that create the illusion that we experience all of reality, but at the core there's only a limited amount of bandwidth available, and our visual senses are easily overwhelmed.

In the late '70s and early '80s, a number of prominent cognitive scientists measured all of this and showed that, roughly speaking, you can perceive and act on about four things per second. That's four things, period. Not four novel things that just appeared in your visual field (those take much longer), and not the output of four new apps that you just downloaded. It's four things, total.

This is a long digression to my main point, which is that augmented reality is the experience of contextually appropriate data in the environment. And that experience not only can, but MUST, use every sense available.

Six years ago I proposed using actual heat to display data heat maps. This is a sketch from my blog from when I wrote about it. The basic idea is to use a Peltier junction in an armband to create a peripheral sense of data as you move through the world. You could hook it up to Wifi signal strength, housing prices, crime rates, or Craigslist apartment listings, and as you move through the world, you can feel whether you're getting warmer to what you're looking for, because your arm actually gets warmer. This lets you use your natural sensory filters to decide whether it's important. If it's hot, it will naturally pop into your consciousness; otherwise it's there only if you want it. You can check in while doing something else, just as you gauge which way the wind is blowing by which side of your face is cold, and you're not adding information to your already overstuffed primary sense channels.
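The core of the armband idea is a mapping from a data reading to a temperature. A minimal sketch of that mapping in Python follows; everything in it is hypothetical and not from the original proposal. `HeatMapBand` is an illustrative name, the 32°C neutral and 38°C warmest setpoints are assumed values chosen to stay well below painful heat, and the Wifi example assumes signal strength in dBm from -90 (weak) to -30 (strong). Actually driving a Peltier junction to hold that temperature (power control, feedback from a skin sensor) is outside the scope of the sketch.

```python
from dataclasses import dataclass


@dataclass
class HeatMapBand:
    """Hypothetical armband controller: maps a data reading to a target temperature."""

    data_min: float          # reading that should feel neutral
    data_max: float          # reading that should feel warmest (must differ from data_min)
    neutral_c: float = 32.0  # ASSUMED neutral skin temperature, in Celsius
    warmest_c: float = 38.0  # ASSUMED upper limit, kept well below painful heat

    def target_temperature(self, reading: float) -> float:
        # Normalize the reading into 0..1, clamping values outside the range
        # so the band never goes hotter than warmest_c or cooler than neutral_c.
        t = (reading - self.data_min) / (self.data_max - self.data_min)
        t = max(0.0, min(1.0, t))
        # Interpolate linearly between the neutral and warmest temperatures.
        return self.neutral_c + t * (self.warmest_c - self.neutral_c)


# Example: Wifi signal strength in dBm, where -90 is weak and -30 is strong.
band = HeatMapBand(data_min=-90.0, data_max=-30.0)
for dbm in (-95, -90, -60, -30):
    print(dbm, band.target_temperature(dbm))
```

A linear map is the simplest choice; a real device might prefer a curve that stays near neutral for most readings and warms quickly only near the target, so that only "hot" data pops into consciousness.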

If AR is the experience of any kind of data through any sense, then we have the option to associate secondary data with secondary senses, creating hierarchies of information that match our cognitive abilities.

For me, augmented reality is the extension of our senses into the realm of information shadows, where physical objects have data representations that can be manipulated digitally as we manipulate objects physically. To me this goes further than putting a layer of information over the world, like a veil. It's about enhancing the direct experience of the world, not replacing it, and doing so in a way that neither fades completely into the background, like ambient data weather, nor takes over our attention.

So what I'm advocating is a change in language away from "augmented reality" to something that's more representative of the whole experience of data in the environment. I'm calling it "Somatic Data Perception," and I'll close with a challenge to you. As you're designing, think about what IS secondary data and what are secondary senses, and how can the two be brought together?

Thank you.