This is Part 2 of a pre-print draft of a chapter from Smart Things: Ubiquitous Computing User Experience Design, my upcoming book. (Part 1) (Part 3) (Part 4) The final book will be different and this is no substitute for it, but it's a taste of what the book is about.

Citations to references can be found here.

Chapter 1: The Middle of Moore's Law

Part 2: The Middle of Moore's Law

To understand why ubiquitous computing is particularly relevant today, it's valuable to look closely at an unexpected corollary of Moore's Law. As new information processing technology gets more powerful, older technology gets cheaper without becoming any less powerful.

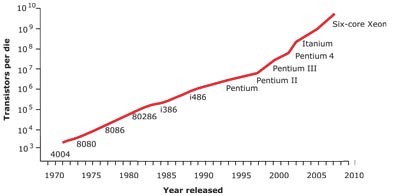

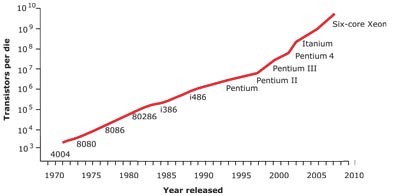

Figure 1-2. Moore's Law (Based on Moore, 2003)

First articulated by Intel Corporation co-founder Gordon Moore, today Moore's Law is usually paraphrased as a prediction that processor transistor densities will double every two years. This graph (Figure 1-2) is traditionally used to demonstrate how powerful the newest computers have become. As a visualization of the density of transistors that can be put on a single integrated circuit, it represents semiconductor manufacturers' way of distilling a complex industry to a single trend. The graph also illustrates a growing industry's internal narrative of progress without revealing how that progress is going to happen.

Moore's insight was dubbed a "Law," like a law of nature, but it does not actually describe the physical properties of semiconductors. Instead, it describes the number of transistors Gordon Moore believed would have to be put on a CPU for a semiconductor manufacturer to maintain a healthy profit margin, given the industry trends he had observed over the preceding five years. In other words, Moore's 1965 analysis, which is what the Law is based on, was not a utopian vision of the limits of technology. Instead, the paper (Moore, 1965) describes a pragmatic model of factors affecting profitability in semiconductor manufacturing. Moore's conclusion that "by 1975 economics may dictate squeezing as many as 65,000 components on a single silicon chip" is a prediction about how to compete in the semiconductor market. It's more a business plan and a challenge to his colleagues than a scientific result.
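To see where a number like 65,000 can come from, here is a rough sketch of the extrapolation. It assumes a starting point of about 64 components per chip in 1965 and a doubling every year, which is my reading of the trend in the 1965 paper; the function name and the exact starting figure are illustrative assumptions, not taken from Moore's text.

```python
def project_components(start_count, start_year, end_year, doubling_years=1.0):
    """Extrapolate a component count that doubles every `doubling_years` years."""
    doublings = (end_year - start_year) / doubling_years
    return start_count * 2 ** doublings


# Assumed starting point: roughly 64 components per chip in 1965,
# doubling annually (an illustrative reading of the 1965 trend).
projected_1975 = project_components(64, 1965, 1975)
print(f"Projected components in 1975: {projected_1975:,.0f}")
```

Ten annual doublings of 64 gives 65,536, which lines up with the "as many as 65,000 components" figure in the quote. Changing `doubling_years` to 2.0 shows how sensitive the projection is to the assumed pace.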

Fortunately for Moore, his model fit the behavior of the semiconductor industry so well that it was adopted as an actual development strategy by most of the other companies in the industry. Intel, which he co-founded soon after writing that article, followed his projection almost as if it were a genuine law of nature, and prospered.

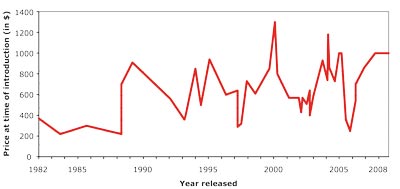

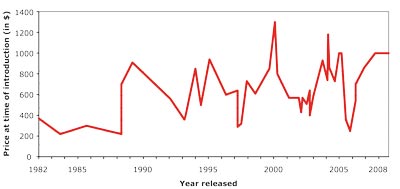

Figure 1-3. CPU Prices 1982–2009 (Data source: Ken Polsson, processortimeline.info)

The economics of this industry-wide strategic decision holds the key to ubiquitous computing's emergence today. During the Information Revolution of the 1980s, 1990s and 2000s, most attention was given to the upper right corner of Moore's graph, the corner that represents the greatest computer power. However, as processors became more powerful, the cost of older technology fell as a secondary effect.

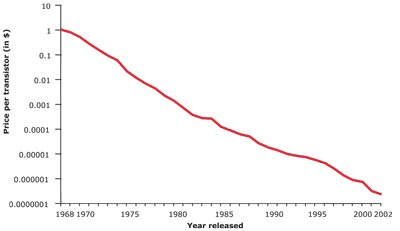

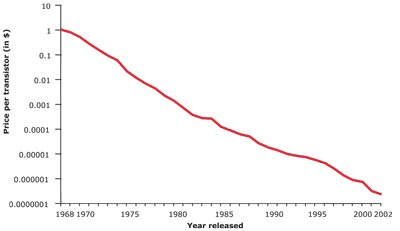

Figure 1-4. Per transistor cost of CPUs, 1968–2002 (Based on: Moore, 2003)

The result of power increasing exponentially as the price of new CPUs remains (fairly) stable (Figure 1-3) is that the cost of older technology drops at (roughly) the same rate as the power of new processors rises (Figure 1-4). Since new technology gets more powerful very quickly, old technology drops in price just as quickly. However, although it may get cheaper, it does not lose any of its ability to process information. Thus, older information processing technology is still really powerful, but now it's (almost) dirt cheap.

[Footnote: This assertion is somewhat of an oversimplification. Semiconductor manufacturing is complex from both the manufacturing and pricing standpoints. For example, once Intel moved on to Pentium IIIs, it's not like there was a Pentium II-making machine sitting in the corner that could be fired up at a whim to make cheap Pentium IIs. What's broadly true, though, is that once Intel converted their chipmaking factories to Pentium III technology, they could still make the functional equivalent of Pentium IIs using it, and (for a variety of reasons) making those chips would be proportionally less expensive than making Pentium IIIs. In addition, these new Pentium II-equivalent chips would likely be physically smaller and use less power than their predecessors.]

Take the Intel i486, released in 1989. The i486 represents a turning point between the pre-Internet PC age of the 1980s and the Internet boom of the 1990s:

- It ran Microsoft Windows 3.0, the first commercially successful version of Windows, released in 1990.

- It was the dominant processor when the Mosaic browser catalyzed the Web boom in 1993. Most early Web users probably saw the Web for the first time on a 486 computer.

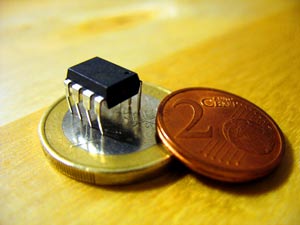

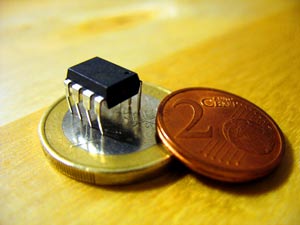

At the time of its release, it cost $1500 (in 2010 dollars) and could execute 16 million instructions per second (MIPS). If we look at 2010 CPUs that can execute 16 MIPS, we find processors like Atmel's ATtiny (Figure 1-5), which sells for about 50 cents in quantity. In other words, broadly speaking, the same amount of processing power that cost $1500 in 1989 now costs 50 cents, uses much less power, and requires much less space.

Figure 1-5. ATtiny Microcontroller, which sells for about 50 cents and has roughly the same amount of computing power as an Intel i486, which initially sold for the equivalent of $1500 (Photo by Uwe Hermann, licensed under Creative Commons Attribution-Share Alike 2.0, found on Flickr)
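As a back-of-the-envelope check on the i486 comparison, the two price points can be turned into an implied "halving time" for the cost of 16 MIPS of processing. This is only a sketch: it takes the $1500 (1989) and 50 cent (2010) figures from the text at face value and assumes a smooth exponential decline between them, which real chip pricing does not follow exactly. The function name is hypothetical.

```python
import math


def halving_time_years(old_price, new_price, years_elapsed):
    """Years for the price to halve, assuming a steady exponential decline."""
    halvings = math.log2(old_price / new_price)
    return years_elapsed / halvings


price_1989 = 1500.00  # i486 at release, in 2010 dollars (from the text)
price_2010 = 0.50     # ATtiny-class microcontroller in quantity (from the text)

ratio = price_1989 / price_2010
years = halving_time_years(price_1989, price_2010, 2010 - 1989)

print(f"Price ratio: {ratio:,.0f}x cheaper")
print(f"Implied halving time: {years:.1f} years ({years * 12:.0f} months)")
```

Under those assumptions the price fell by a factor of 3,000 in 21 years, which works out to halving a little faster than once every two years, in the same neighborhood as the doubling rate usually quoted for Moore's Law.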

This is a fundamental change in the price of computation--as fundamental a change as the change in the engineering of a steam boiler. In 1989, computation was expensive and was treated as such: computers were precious and people were lucky to own one. In 2010, it has become a commodity, cheaper than a ballpoint pen. Thus, in the forgotten middle of Moore's Law charts lies a key to the future of the design of all the world's devices: ubiquitous computing.

Tomorrow: Chapter 1, Part 3
