March 10, 2005

Home furniture mutating

Found this article in an old IDSA newsletter. It talks about how furniture is changing to accommodate new usage patterns. Some excerpts:

Many of the furniture designs introduced here last week at the International Home Furnishings Market and that will be in stores in the fall are likewise intended for a harried, hassled nation -- in this case, people who eat on the run, hypertask, work all the time, relocate often, and are too busy to pick out furniture or to give much thought to their design style.[...]

One solution touted at High Point was the "lift top rectangular cocktail table" by Lane Home Furnishings. It looks like a regular table, but the top pulls up and toward you "so it's just about table height,"

[...]

Ottomans are getting higher -- some are at least 4 inches higher than they used to be -- the better to balance your laptop on your lap while you're sitting on the sofa. Likewise, reclining chairs are being reinvented for people who can't spare the time to simply recline.

[...]

"Now, you see your back and your foot rest operate separately," he said, making it possible to sit up straight while your legs are up and your computer's on your lap.

[...]

Since we're on our cell phones all the time anyway, why not literally be on our cell phone? This seems to be the message of the new Cell Phone Stash Chair by Lumisource, a plush chair stylized with a keypad. Fittingly, it multitasks by opening up for storage in the seat.

[...]

Where at one time consumers purchased furniture with the expectation that they would spend years, even decades, at the same address, today's manufacturers are designing for a population on the move.

[...]

As a result, a new sub-genre of home furnishings seems to have emerged, meant to suggest a kind of faux togetherness. Stanley Furniture's "Provincia Trilogy Partners Desk" would fit into this category: It's a desk for three people with two laptop stations.

It sounds like there's a somewhat cynical sneer to some of the pieces (or maybe just to the writer's coverage of them), but, critical design questions aside (what does it communicate to users when their relaxation and work spaces have been explicitly merged?), it's interesting to see furniture manufacturers shifting their focus to design based on an understanding of furniture's changing role. It's still pretty haphazard (the bemused tone of the article clearly shows that this is all new and wacky), but it's nice to see the industry more explicitly basing design on an analysis of user needs.

March 09, 2005

Open Source Precursor

Looking through my Dad's bookshelf, I started flipping through a book called Henry's Attic. It's a fun book describing stuff that's been donated to Greenfield Village and the Henry Ford Museum, in Dearborn. The museum is a tremendous collection of the history and culture of technology. You should go there if you're anywhere near Detroit.

One of the entries caught my eye. It was for the 1957 Liberty Mutual Insurance Company's "Survival Car I":

Among the innovations that the project spawned were the concept of "packaging" passengers for safety, simulating accidents to analyze how injuries occurred, and using dummies in auto-crash testing.[...]

The tanklike vehicle--basically a 1961 [sic] Chevrolet Bel Aire--incorporates some sixty-five safety features for preventing accidents or reducing injuries when accidents occur.

That's not surprising, but here's the kicker, the part that makes this a parallel to today's open source/closed source debates:

Although auto manufacturers thought the safety features on the survival cars would not sell, more than fifty of them are standard equipment on today's automobiles. Liberty Mutual [...] sought no patents on the research or designs developed for the survival cars. [emphasis mine--mk]

Further, the DOT's site has an interesting quote about these cars from one Ralph Nader:

"That an insurance company," Nader said, "had to produce the first prototype safety car itself constituted a stinging rebuke to the automobile makers." The auto industry was hostile to Survival Cars; Nader reported that the experimental Mustang (1963) included eight of the safety features, but all were dropped by the time the car went into production.

For me the primary lesson is that investment in good user experiences eventually pays off, and that researching ways to make products easier to use, safer and more functional, then sharing the most valuable insights with everyone, pays off for everyone. Liberty Mutual invested $250K, in 1960 dollars, into their program. I'm sure that their innovations have saved many times that amount for the insurance industry (which doesn't have to pay out those claims) and for consumers. I bet even car companies made money off of making safer cars.

Another lesson is that it probably took car companies a couple of tries, and a push from consumers and regulators, to figure out how to incorporate these features into their cars. So iterative development, patience and perseverance are also necessary to understand exactly which of these ideas to adopt, and how. For example, I don't see many modern cars with six windshield wipers or accordion doors (some pictures of the thing).

March 03, 2005

Jon Udell's Google Maps Annotation

About a year and a half ago, I did a sketch of an idea I had about what a dynamically created travelogue could look like. It was nothing earth-shattering, but it was an interesting exercise.

Jon Udell has now taken this to the next level, using Google Maps and various video/photo clips to illustrate a walk he takes around his neighborhood (Flash). It's really nice, and it shows what may be possible if this kind of personal data can get mushed together automatically. That said, I still don't get why there aren't more consumer-grade GPS-savvy cameras. Even if the battery life is bad, it still seems like there would be a big enough market for 'em.

Intimate computing, indeed

This ad from the 1918 Sears catalog is often cited by ubicomp people (including me, in my upcoming talk at the IA Summit) as an example of how electric motors stopped being special things and disappeared into our tools, the point being that computation is likely to do the same. When the ad is discussed, people point to the sewing machine attachment. But I rarely see anyone cite the other interesting attachment in this ad: the one that's second from the bottom, right above the grinder. Yes, that one, the vibrator. Sears was right; it really is an ad for "Aids that every woman appreciates."

(click through for the big ad--and, yes, I know there was probably some other justification for vibrators, but, really, I'm sure everyone knew what was up)

February 28, 2005

Analog is the new digital

As I showed in a recent blog post, I carry a paper notebook around. I've been doing it for years. From Genevieve, I learned to paste stuff into it--like business cards, notes on napkins, and clippings from the Economist. That gives me an artifact and context (the notes around it), rather than having the artifact in one place and the notes in another.

I also, of course, note the prevalence of index cards. They're all over agile programming techniques, geek task organization and the current personal productivity trend.

And then there's the reinterpretation of digital artifacts in meatspace (blinkenlights, Space Invader's digital tile graffiti, and the "Connect-Four" version of Tetris).

Anyway, this has led me to conclude (of course) that

Analog is the new digital.

What does that mean? Got me, but it's a slogan. ;-)

February 22, 2005

Warcraft and feedback

I've been playing a lot of World of Warcraft the last couple of weeks. Ben has been talking about it, and I'd heard so much about it that I felt I needed to try it. It's been years since I last seriously played video games, so I was behind on all this MMOG stuff and wanted to see what was up.

To write a simple review: Warcraft is fantastic. The way that the experience is constructed is very clever and there are great little touches everywhere. The problems the designers face are similar to those in many other kinds of experience design (whether for theme parks, web sites or functional consumer products): they have to keep people's attention, make it fun regardless of how long someone's been playing, guide people deeper without alienating or boring them, and constrain the behavior of many hundreds of players while giving the perception of complete freedom.

It's a difficult task and they pull it off very well. I may be writing more about it as I force myself to tear away from the game, but suffice it to say I've been dreaming in Warcraft for about a week, and although that's a little disturbing, it's a testament to the power of the experience (or maybe I just have too much time on my hands these days, but I don't think that's it).

The observation for today is on feedback. One of the ways that Warcraft differs from everyday life, and one of the primary ways that it stays so addictive, is through continual quantitative feedback. There are a lot of different progress indicators, and one or another is always going up. There are many, many ways to get "better" and, unlike the real world, these are quantified and observable. Rather than the abstract notion that I'm getting smarter by reading books, or getting a little more fit by walking, I know immediately when I get better and by how much. This is powerful positive feedback and although it's certainly possible to go too far with obsessing about quantifiable achievement, it's one of the things that drives the interest, and continual use, of the game.

Why would someone--namely, me--spend hours killing and skinning virtual animals just to get his shooting, skinning and cooking skills up?

This led me to think about smart devices and social effects. One role that smart devices, or personal technology in general, can play in people's lives is to quantify normally unquantifiable achievements. This quantification can help us conceptualize numerically things that we can't imagine otherwise (and we love to boil things down to numbers when obsessing, don't we? See: engine displacement in hotrods, MHz in overclocked CPUs, heart rate, frequent flier miles, dollars in the bank, etc.). I think there's immense potential in devices that help us understand how much we do x or y. Then the whole world becomes a little like Warcraft: we can take our goals ("Level 14" becomes "a bit closer to my 43Things goal") and maybe make them happen in the small steps that Warcraft shows us and real life doesn't.

[The screenshots are, in order: places Irving, my dwarf hunter, has explored in the land of Westfall (the UI exposes more map as Irving travels), Irving's reputation with various races and cities, and Irving's skills (you can see he's not much of a cook, but he's an even worse miner ;-).]

February 17, 2005

Liz's Site Specific Wireless Art Class

Liz posted an interview she did about the class she taught last fall at SFAI. I was flattered to be invited to participate in the final crit of one of the first art classes anywhere to treat the digital radio spectrum as a medium, and it was very interesting. She generalizes from her experience to talk about what it takes to teach art in such a heavily technological medium. Go Liz!

January 01, 2005

podium + laptop = change in educational culture

A new school in the UK was furnished without teachers' desks or desktop computers. Instead, they get podiums and laptops:

She disapproved of the stooped stance at the teacher's desk, and the way that trailing wires seemed to snake in all directions. She didn't like the way a teachers' desk occupied valuable space at the front of the room, or the fact that the laptop screen was itself a distraction when the teacher wanted pupils' eyes to be fixed on the whiteboard. She went looking for an alternative - and eventually found one. "Using one of these, the teacher can use the laptop and see all the children," she says.

(from this article)

People have often attempted to change behavior by changing affordances, but they rarely admit to it. It's interesting to see how consciously they're trying to create a different behavior in teachers and students, and it'll be interesting to see how long it lasts. Usually changes dictated from above, without much incentive beyond the mandate itself, don't work well.

December 31, 2004

Two views of user research in industrial design

Reading IDSA's "Innovations" magazine this morning, I found an example of two very different ways to view user research in the industrial design process.

In describing the design of the DeWalt 735 planer, Bob Welsh and David Wikle talk about how they do user research:

Marketing led the charge by conducting user research throughout the country, digging into what fed portable planer users' likes, dislikes, needs and wants.[...]

This preliminary information was converted into quality parameters, which focused on the team's efforts. These parameters were ranked in order of importance and quantified so that we could directly measure our progress toward each prescribed target. [...] The team proceeded to zero in on the top four opportunities: surface finish, minimum snipe, accuracy and ease of knife change. Curiously enough [because it's a portable planer --mk], portability pulled up last in the rankings.

What's interesting about this to me is the tension between their functionality-driven philosophy, natural in a power tool company, and the recognition that the rationality of function is not necessarily a primary driver. In between discussions of features, thoughts like the one about portability slip through. Portability is not an actual functional factor, merely an aspirational one, in the way people choose planers. Similarly, they acknowledge these kinds of emotional/surface design decisions later when they talk about the placement of the threaded posts at the corners. Yes, they serve a functional purpose, but "the team thought that visually exposing the posts would drive the machine's character as well as garner credit for their function and design." In other words, the posts are there to look like big, badass bolts as much as to elevate the mechanism. Another quote is amusing: "just above the opening to the cutterhead, 'teeth' were added to cognitively warn the user that this is the business end of the machine."

Tej Chauhan of Nokia takes the opposite direction when describing his design for the Nokia 7600, that wacky lozenge phone that came out last year. He begins his description by talking about functionality:

The Nokia 7600 had to look like no other mobile handset. But this wasn't an exercise to design something different just for the sake of being different. The form had to be as purposeful as it was unique.

He then describes Nokia's user research process/philosophy:

Our research extends from people, to trends, ergonomics, technologies and a host of other criteria. Trend research is in itself a vast area. "It's all about making observations, and then defining them and telling them as stories," explains Liisa Puolakka, experience design specialist at Nokia. "And like stories, they should have a frame, characters, context, references to culture and society, and so on. These observations are looking at new emerging interests, lifestyles, desires, dislikes, attitudes, etc. But the most important thing is that the products we then create appeal to people, are relevant to them and their emotions."

Of course the role that the 7600 was supposed to play was to introduce new functionality to the world, since it was Nokia's first 3G phone, so the phone has a camera that can do video and a large color screen. Plus, it's designed to be more of a camera and picture viewer than something to dial numbers, enter text or talk on, as evidenced by the wacky key layout: "Having the keys on both sides of the display put the display and image at the centerpiece of the design. It also encouraged two-handed use, giving the product an instinctively familiar [in a phone?!? maybe a camera. --mk] and natural-to-hold quality."

Comparing Nokia to DeWalt is interesting: one talks about functionality, the other about emotion, but they're both making consumer products and both have to deal very much with both. What's interesting to me is how the corporate culture of each group has defined its approach to design and the shape of its end product. I would say that if they're making mistakes, it's that they're both taking their positions too far. DeWalt seems almost embarrassed to talk about the aesthetic impact of its design, choosing to couch it in language like "DeWalt design DNA," and Nokia seems so obsessed with the emotional impact of its products that it's forgetting they have to work first. Maybe the two could learn from each other.

December 30, 2004

Arts and Crafts at LACMA

I posted a note to Core77's blog about the Arts and Crafts show at LACMA. Here's the highlight of my post:

The show's strongest point is how it contextualizes the objects and draws interesting connections between product design and social philosophy. Most surprising is how much the European movement linked handicrafts and motifs with "national identity." Traditional elements stand in for "traditional" values and explicitly refer to a mythological agrarian, pre-industrial utopia.

December 01, 2004

Tech companies start to look past tech

change and innovation in technology that people will see affecting their daily lives, he says, will come about slowly, subtly, and in ways that will no longer be "in your face". It will creep in pervasively.

This is coming from Nick Donofrio of IBM, quoted in this story from the BBC. It's interesting to see a representative of a tech company downplaying the immediate effects of technology. That implies a potentially deep strategic shift, one that requires a different approach to understanding the role technology plays in people's lives. Of course he's using it to push IBM's pervasive computing agenda (whatever that is) and, surprisingly, big iron:

Behind this vision should be a rich robust network capability and "deep computing", says Mr Donofrio. Deep computing is the ability to perform lots of complex calculations on massive amounts of data, and integral to this concept is supercomputing.

IBM clearly hasn't given up all of its assumptions, and I think the supercomputing idea is totally shoehorned into the pervasive idea, but it's interesting to see that they're at least giving lip service to some of these thoughts, if only to further their existing position. GE created GE Capital when they realized that their role in the building process had changed to one of financier and outsourcing consultant. IBM's consulting unit became responsible for a big chunk of the profits because they realized that they weren't just selling computers, but services. These things point to the idea of understanding the system in which products exist. Similarly, this could be the beginning of an attitude shift in tech toward understanding and manipulating the social system in which technology is used.

November 28, 2004

Books I recommended at Design Engaged

At Design Engaged we put up Post-Its of books we liked. I put up the following 3 books and decided to share my picks with everyone, especially now that Christmas is coming up. ;-)

Human-Built World: How to Think About Technology and Culture by Thomas Hughes. A short and awesomely enlightening history and contextualization of where our contemporary attitudes toward technology come from. (Thanks, Mom!)

Imperial San Francisco: Urban Power, Earthly Ruin by Gray Brechin. The Bay Area was the worldwide center of technology innovation once before, during the heyday of mining in the 19th century. A big biotech campus is soon going to sit on the spot where the worldwide center of iron, steel and machinery innovation was around 120 years ago. It's intensely instructive to see how power (literal, in terms of electricity, and figurative, in terms of influence) related to what, how and why technological advancement happened, and what the consequences were (it's not surprising that John Muir and modern ecological consciousness emerged in Northern California when you see the context). And it's a great read, especially for those who live in SF and the surrounding area.

The Red Queen: Sex and the Evolution of Human Nature by Matt Ridley. A masterful presentation of sex, evolution and how much like the birds and the bees we really are. Those who still hold to Cartesian dualism may be surprised at how much of our behavior is part of a feedback system that's quite obsessed with sex-based competition. All of Matt Ridley's books rock, but this was the first one I read and it's still a big touchstone when I think about human behavior.

[An update: Andrew posted the whole list on his blog. Excellent!]

November 13, 2004

Talking, walking and chewing gum

I'm at Andrew Otwell's Design Engaged conference in Amsterdam right now with a bunch of really great folks. Yesterday I presented my latest polemic, Talking, walking and chewing gum: the complexity of life and what it means for design. Here's what I said, roughly.

Intro (5 minutes)

Hi. I'm Mike Kuniavsky. I wrote a book on user experience research techniques and I co-founded Adaptive Path, a pretty well-known San Francisco consulting company.

Earlier this year I left Adaptive Path and decided to devote some time to thinking about ubiquitous computing and the social effects of technology. And to write polemics, of which this is one.

My thoughts about ubicomp, society, technology, and design in general are not unrelated. The material culture around us does not exist in a vacuum. Products are a reflection of social values, and they affect our values in turn. Objects endowed with information and communication capabilities are a reflection of a set of specific values. They also change our understanding of what an object is, how we relate to it and, in turn, how we relate to all other objects. How many of you have not wanted to do a "Find File" for your keys across your house? I once dreamt of making a symlink in the LA freeway system so that I could get from Pasadena to Santa Monica much faster than by traversing all of those freeways.

Parallel to this line of thought, I've been consulting with large corporations on user-centered design. I started noticing similarities between the problems that they were having, my analysis of the social effects of technology, and what I saw happening elsewhere. So I started making a list of phenomena that seem somehow related. It's a list of things that represent trends I've seen firsthand, and which have been identified as characteristic of our time.

The list

| Tired: moving away from | Wired: moving toward |
|---|---|
| Roles | Skills |
| Fast food | Slow food |
| Linear | Emergent |
| Alexander | Koolhaas |
| Dominant Trends | Multiple simultaneous scenarios |
| Supply-driven | Demand-driven |
| Centralized | Distributed |
| Mass production | Mass customization |
| Marketing | User research |
| Policy | Guidelines |
| Waterfall planning | Agile development |
| Picasso | Duchamp |
| Efficiency | Innovation |
| Long future | Short future |
| Sprockets and pulleys | Black boxes and wires |
| Product lines | Customer lines |
| Price | Value |
| Bureaucracy | Federalism |
| Taylor | Peters |
| Utopian futures | Survivable futures |
| Specs | Personas |
| Hierarchical | Flat and social |

Talking and walking (5 minutes)

I look at this collection and try to identify what binds it together. The pattern that appears is a recognition of the complexity of the world, of the unpredictability of the world, of the incomprehensibility of the world, of the contingency of the world, of the time-based, sporadic, overwhelmingly confusing nature of the world.

What I realized while looking at this list is that we are awakening to the fact that the more we know of the world, the more we know how little it follows simple rules. It's all grey area.

To me, this means that the framework of thought of the last 600 years is coming to an end. Renaissance Humanism was the branding of the West's growing dissatisfaction with Catholicism as the main explanation of the workings of the world. The dominant idea that followed is that by dividing the world into small pieces, we can find simple laws that explain it. That idea, that way of understanding, is waning. It's been fading for at least 50 years.

Two things have driven this decline: Communication technology and transportation technology. Cheap telephones, televisions, Internet connections, cars and air travel have made information cheap and plentiful. Talking and walking. And all the cheap and plentiful information has done many things, but what it has really done is to show people how complex their world is.

Chewing gum (5 minutes)

Life is incredibly complex, and now it's not just the scientists at the Santa Fe Institute and Wall Street mathematicians who know it. Many people see it and feel it. But most don't know what to do about it. We can see compensation mechanisms appear. Nihilism, irony, fundamentalism and nostalgia are all ways to simplify the world. We are at the end of the prescriptive rationalist vision of the world, and we're waiting for the next framework for explaining the world to appear. It has appeared, but it's going to take a while before it's in full bloom. After all, it was 300 years between Giotto and Isaac Newton.

Which brings me to my main point: It is our job as designers to recognize this set of ideas, to understand it, to create for it, and to name it.

Design is a projection of people's values onto the products of technology. Victorian decoration was a celebration of consumer affluence and machinery over the material world. Bauhaus minimalism was a utopian interpretation of products, buildings and people as engineered components. Designers are interpreters, translators, popularizers, and conduits for ideas. We embrace the leading edge and communicate it to the mass market.

We are past the confusion of Postmodernism, which grasped at relativism and then wore out its welcome. We do not live in a relative age, we live in a complex age, but it hasn't been branded yet. It's time for that to change.

There's a tendency to focus on designing for the little problems, but the big context IS important. We are doing ourselves a disservice in the long run by not designing for these ideas. Now is the time for us to embrace complexity by designing for systems, designing metaphors (as Ben and Adam have talked about), designing flexible tools (that allow for the kind of gardening Ben talks about) and, most importantly, designing incentives for people to consciously think about complexity management.

The complexity of the world is an uncomfortably bright light. Most people have found ways to turn away from it. You should not. You are the people who will make the complexity of the world tolerable. Go to the light of complexity. Run to the light!

[I'd like to thank Ben Cerveny for his insight into this over many meals and car drives; the good ideas here are his]

November 06, 2004

The history of the home theater

A nice, short summary of a longer paper by Jeffrey Tang that traces the history of the home theater in the 70s and 80s.

it was not technological breakthroughs, but rather marketing considerations which led to diverse “product families” centered around three types of audio designs: the cassette recorder, the combination unit (“boom box”), and the personal stereo. Both the producers of audio equipment, and audio equipment users assigned new meanings to these sound machines and to the practice of listening to music.[...]

These fields came together when [Dolby] introduced a home version of its 4-channel cinema playback system, called Dolby Surround. Technically, Dolby’s initial system differed little from quadraphonic sound, a technology that had already failed to woo the music lovers of the 1970s. But where quadraphony had failed as a simple technical upgrade to the home stereo, the new system promised not just improved fidelity but an entirely new kind of experience. Its social meaning was dramatically, and successfully, reconstructed.

Technological innovations enable the social innovations; they do not define the whole of them, even though to the participants at the time it may seem to be only about the boxes. The experience is not just about the boxes, and it's not even about the immediate experience (the interface) of the boxes. The experience is partly created by the boxes, and partly by the configuring and tweaking the paper mentions (and that we've all been through), but it's not limited to them. It's a lesson that product designers should keep in mind, though I think there are few techniques for encapsulating or designing for it.

November 02, 2004

The tightening spiral of cool

A surprisingly good report on mesh trucker hats and the ever-shortening cycles of fashion that we've all experienced, from the decidedly unfashionable USA Today (a year ago!).

The cool continuum — that twisty trajectory that traces pop culture from cultish to trendy to mainstream to so-over-it's-embarrassing to, finally, kitsch — is being compressed.

And then there's this interesting paragraph:

Being cool means being the first to yank something out of context and layer on the contradictions. Having money, for instance, is OK if you cloak it in Salvation Army apparel and a shift waiting tables at the local (non-Starbucks) coffee shop. Desk jobs are verboten. The goal? A career in dilettantism.

In Amsterdam I'm going to be talking about how communication and transportation technologies have revealed the complexity of the world to a record number of people in the last 50 years. The understanding that the cycles of the world are really intricate has shifted how people react to the objects and roles in their world (thus the dilettantism). The last 10 years have pushed this new understanding, and people's reactions to it, to a new level, aided by cell phones, the Internet and deregulated air travel. I think that the shortening of fashion cycles is related to this, and is itself a product of both that understanding and the technologies that created it, so it's interesting to see mainstream (and how much more mainstream can you get than USA Today?) recognition and analysis of the phenomenon.

October 18, 2004

Electrical engineers acknowledge social science

It's interesting to see how ideas slide around. In this article, in an electrical engineering publication, the thinking goes from "component manufacturers are saying that this ubiquitous computing thing is the next big thing" to "people do funny stuff with their personal technology" to "someone should decide how the electronics should be integrated into people's lives" to

It doesn't take much imagination to see that if you are involved in the development of personal portable electronic products, your engagement with the marketing department is only going to grow as time passes. The subtle nuances of how users actually handle the gadgets in a social context could well set the course of product development.

Which, of course, is what user-centered design people have been saying all along, but it's interesting to see it recognized by an engineering publication, at least. I suspect it'll take a while to become engineering doctrine, but the acknowledgement that ideas come from end users, rather than just going to them, is important.

It's still funny, though, that even though this article recognizes social science, there's still some discomfort with the whole idea, as the last line shows:

Your marketing colleagues might come to include as many social scientists as "hard" scientists. I will leave it to you to decide whether that prospect is appealing.

September 12, 2004

The Sony W as "furniture"

The following paragraph caught my eye (really, the eye of Google's news alert) in this story on Apple's new G5 iMac design:

"Sony has another desktop, the W series, whose overall design feels more like the iMac. It feels more like modern furniture design than a consumer electronics product. In fact, we have one in our living room. People are always commenting on what a beautiful design it is. When the keyboard is folded up it doesn't really look like a computer."

It's a minor point, but telling. Some thoughts:

- It doesn't look like a computer, and that's considered good.

- It does look like modernist furniture, and that's also considered good.

- The problems with the iMac are about tangled cables and instability--things that identify it as machine-like.

What this points to, for me, is a recognition that technology's role is continuing to fade into the background and that people are starting to desire technology that doesn't advertise itself as such. Not that that's a big revelation, but it's interesting to see these ideas surfacing as things people actually want.

September 08, 2004

Circuit Bending in the WSJ

The Wall Street Journal had a piece on circuit bending in yesterday's paper.

It's interesting to me to see this surface, but it only makes sense: technology is getting more pervasive and cheaper, so there's less risk in breaking it, and the DIY esthetic is being encouraged by all of the TV shows about making and modifying stuff.

I wonder if there's a relationship between a technology's cultural penetration, its power and a culture's affluence on one hand, and people's interest in modifying it purely for their own pleasure on the other? I mean, it was about 50 years after the introduction of the automobile that hot rodding took off, but only after America was pretty rich and the 454 Chevy big block became commonplace. Now it's about 50 years after the beginning of computers and we're an affluent culture...so what's the current tech equivalent of the 454?

August 22, 2004

Ideachasms

I've been thinking about the adoption of new ideas lately, both in companies and in the market at large. Inspired by the Cambrian Explosion and subsequent die-off, I've been thinking about what happens to people's perceptions when new ideas come along.

Thought 1: Idea definitions narrow with time

When a substantial new idea appears, the boundaries of its definition are not known, and there are many different interpretations of it. However, as time goes on, the rate of new definitions slows, the idea space gets explored, fewer variations appear and, finally, the number of things that define the concept is reduced. Graphed, it looks like this:

Thought 2: A comprehension threshold

There is a point at which the number of variations on an idea becomes too great to communicate a coherent version of that idea to an uninitiated audience. In other words, if there are too many alternative interpretations of an idea to choose from, someone who hasn't been primed (by experience with similar ideas, or by in-depth research into what all of the variations have in common) won't get it. Or if they do get it, they'll get either a rudimentary version of it or a distorted, incorrect one.

NOTE: I'm intentionally simplifying this. In reality, I think that exposure to ideas makes them more comprehensible, so the right edge of the threshold is likely higher than the left, but for the sake of this discussion, I've made it a simple horizontal line.

Thought 3: Comprehension thresholds create Moore's chasm

Geoffrey Moore defined a chasm in new technology adoption in his book "Crossing the Chasm." In it, he divides the adoption market for a given technology into 5 divisions:

A: Technology enthusiasts

B: Visionaries

C: Pragmatists

D: Conservatives

E: Skeptics

Note: I've cut off the extreme tails of the bell curve; theoretically it goes all the way to 0 on both ends.

The graph represents market size for sections of a market, but it can also be read left to right as the sequence in which a technology is adopted, which is how Moore's chasm appears (roughly speaking, of course: in reality, the gap represents the difficulty of reaching a market, not time, but it's a reasonable abstract model). His insight is that, for some reason, it's hard to get Pragmatists to adopt a new idea, and crossing the chasm to reach them may take a lot of energy, time or money. Moore's theory is that Pragmatists want something that "just works," unlike Technology Enthusiasts, who like the tech for the sake of the tech, or Visionaries, who are enamored with what the tech could be.

But what does "just works" mean? Moore talks about the qualities Pragmatists are looking for in a product (that it be stable, reliable, supported, etc.), but I have a different theory. I think that they buy the product when they can be bothered to understand the idea it represents (and thus, the idea's value), and they only bother to understand the idea when the number of competing variations is small enough not to be confusing. In other words, until the number of competing variations on an idea drops below the comprehension threshold, Pragmatists won't care to understand it.

This means that the chasm is the period between the time when the number of variations on an idea rises above the comprehension threshold and the time when it drops back to the point that people care to grok what the idea means (and it's not that they can't understand it; it's just that there are too many options for them to filter through).
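To make that definition concrete, here's a minimal sketch, assuming the variation curve from Thought 1 can be sampled as a count of competing variations per period and that the comprehension threshold is the simple flat line from the NOTE above. The function `chasm_window` and the sample numbers are hypothetical illustrations, not data.

```python
from typing import Optional, Sequence, Tuple


def chasm_window(
    variations: Sequence[int], threshold: int
) -> Optional[Tuple[int, Optional[int]]]:
    """Return (start, end) periods of the chasm.

    start: first period where the count of competing variations exceeds
           the comprehension threshold.
    end:   first later period where the count drops back to or below it,
           or None if it is still above the threshold at the end of the data.
    Returns None if the count never exceeds the threshold.
    """
    start: Optional[int] = None
    for period, count in enumerate(variations):
        if start is None and count > threshold:
            start = period  # too many variants: the idea stops being communicable
        elif start is not None and count <= threshold:
            return start, period  # variants winnowed enough for Pragmatists
    if start is None:
        return None
    return start, None


# Hypothetical curve: an explosion of variants, then a die-off.
counts = [1, 3, 8, 15, 22, 18, 12, 7, 4, 3]
print(chasm_window(counts, threshold=10))  # (3, 7)
```

With these made-up numbers the chasm covers periods 3 through 6. A flat threshold keeps the sketch simple; a threshold that rises over time, which the NOTE suggests is more realistic, would tend to shorten the window.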

Thought 4: Idea variations are embodied in products

For people to be exposed to an idea, there has to be some way it's introduced to them. With product-based ideas, that way is, tautologically, through exposure to products. The graph of idea variations can be read as the number of products on the market that represent a certain idea, with each product a variation. It can also be read as the number of features offered on all the products currently on the market. Initially, when there's a new product idea, it's followed by an explosion of variants, but at some point the complexity of the product variants starts to drop. My claim is that it's only after this has dropped for a while, and the variants have been winnowed to those that are actually successful, that the mainstream market will adopt the idea.

Thought 5: User research rapidly reduces competing ideas

So far this is an evolutionary theory of idea success in the marketplace (and a simple one at that). To a large extent, I believe that's going to happen no matter what, but I also believe that user research can accelerate the evolution substantially and raise the chances of an idea's success in the mainstream market. If the variations on an idea are researched in the Early Adopter stage, it may be possible to identify ones that particularly confuse Pragmatists (the noise in the signal, from their perspective) and eliminate those from products targeted to them. To some extent, I think that this is what we user researchers have been doing all along, but as an explanation for why it's useful, and when it's useful, this set of ideas seems pretty satisfying (at the moment, anyway ;-).

August 09, 2004

Stain-repellent fabrics as social litmus test

I can't tell if the following quotation about why Thomasville is using a new generation of stain-repellent fabrics is deep insight into the social effects of tragedy on consumption, a myopic oversimplification, crass sensationalism, or all three:

"After the events of 9-11, we decided collectively as a culture that it's our friends and our families that matter, not our stuff," says Sharon Bosworth, Thomasville's vice president of upholstery design. "The best thing is to get people to come to our house. You can't have that kind of life unless you take the velvet ropes off. Children and pets can go anywhere. People are invited into every nook and cranny. Nobody is going to say, 'I can't stand her because she spilled red wine on my sofa.' We count our riches these days in friendship."

From Superfabrics: Imagine a plush sofa that can repel mustard.

August 03, 2004

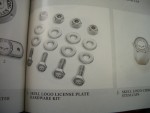

The Harley Catalog

One of my favorite brand experiences arrived in the mail today: the Harley-Davidson 2005 catalog. I don't own a Harley (I ride an old Kawasaki) and I don't particularly like the bikes...but, oh, the catalog. It's the size of a phone book, with full-color pictures on every page, and it takes the "brand promise" of technology fetishization to an amazing extreme. Starting from the beginning, where the catalog suggests that

Before customizing your VRSC model, you should get to know it from front to back. Identifying where parts are located and the impact they have on your bike is a crucial part of the process.

it's all about the minutiae of decorating, personalizing, worshipping your motorcycle. To help you maximize the impact, they custom make variations of every single part on the bike. The catalog recognizes that people don't buy Harleys to ride them; they buy them to customize them, to project themselves, not to use them. Riding is secondary, and--in fact--riding the bikes is pretty de-emphasized. All the parts, down to the washers, on the other hand, are lovingly photographed on a white background, with descriptions worthy of Martha Stewart:

The Lower Triple Tree Cover dresses up the unfinished underside of the triple tree for a complete custom look. The chrome-plated steel cover installs easily, concealing the brake splitter fitting.

It's a complete sensual immersion in chrome and steel, with barely any reference to anything outside the world of the bike. It speaks lust on about five levels, and there are two words that appear at least a dozen times on every page: Harley-Davidson. Yet somehow it manages to be overwhelmingly Harley without being annoyingly Harley. A pretty amazing document from a company that knows where its value lies. Hell, after reading it, I want to chrome something on my bike, even though I haven't washed it in 6 years.

August 02, 2004

Icons for Everybody!

Incomprehensible icons are nothing new. Whenever a new technology with a display and multiple functions appears, designers flock to iconify all of its functions, since icons save screen real estate and translation costs. In general, I think icons are a bad idea in all but a few cases, but they come up again and again. With cars gaining more UI functions as their various subsystems merge their displays, car UI designers have (re)discovered icons. And, not surprisingly, they're experiencing the same problems that icon designers before them had:

But for drivers and passengers, the symbols can sometimes be indecipherable without a stroll through the owner's manual.

And, of course, the traditional "they'll learn what they mean" specious argument is brought up:

Automakers, who started using icons at least a decade ago, say consumers accustomed to seeing icons on personal computers and cell phones are comfortable with them in their cars.

And this quote betrays a deep chicken-and-egg misunderstanding of the nature of symbolic meaning:

"It's all about recognition," says Gary Braddock, design manager of the product design studio for Ford Motor. "If you can create a symbol like the Nike swoop that everyone recognizes, you can add a function to the symbol."

A symbol only becomes meaningfully associated with an idea after the idea has been firmly established. Nike made good shoes for a long time before their symbol came to be associated with their brand values. That's why, when they expanded to China, their shoes had a giant Nike swoosh, whereas their American shoes have a tiny, ever-shrinking one. The symbol means less in China, so its association with the shoe needs to be emphasized more.

What's interesting, as the article points out, is that cars have had icons for quite a while: dashboard lights and control indicators. There were already problems with these (I'm still confused by various heating/cooling icons: am I warming my feet and head or my chest and defrosting the window?), but they were relatively stable (there's even, apparently, an ISO standard). Even the supposedly time- and lab-tested existing icons have problems (PDF file), and the proliferation of new functions is going to push the limits of people's recall. What's going to happen when a bunch of new icons are created?

This is tough stuff, as these groups are finding out, and contentious precisely because it's tough:

In the USA, the National Highway Traffic Safety Administration considered adopting all of the standards, making their use mandatory. But the agency backed down after "several groups said they don't want to go to all symbols, because symbols are not intuitive and some people would not know what they mean," says Stephen Kratzke, associate administrator for rulemaking at NHTSA.

Frankly, I think that this is a holdover philosophy from an era when manufacturing restricted the number of variations on a button or dashboard, restrictions that largely no longer exist.

But that's not my point. I'm thinking that this is a good example of the real-world problems that ubiquitous computing is going to encounter when the, ahem, rubber meets the road and specialized devices have to communicate their functionality intelligibly. If appliance icons are bad now, just wait until this level of integration and confusion hits.

Btw, I found that article on the Intelligent Transportation Society of America's site. Maybe the ITS people have something to teach the smart everyday object people....

July 26, 2004

Good Names/Bad Names

This is a basic branding exercise, but I've been surprised by how often bad names are given to perfectly good technologies, as if it doesn't matter what they're called. I think it matters a lot. Technologies aren't products, but they face many of the same competitive hurdles. So I decided to brainstorm a list of comparable technologies with good and bad names and see which one in each pair was adopted faster (as a technology).

| Good Name | Bad Name |
|---|---|
| Firewire | USB 2.0 |
| Extreme Programming | Scrum |
| Zigbee | Z-Wave |
| Ambient Intelligence | Ubiquitous Computing |
| Blog | Wiki |
| WiFi | 802.11a |
| Linux | BSD |

The ones with the catchier names (which means: easily pronounceable, easily memorable, evocative) seem more popular. There may be some entanglement there: maybe better technologies come from more clued-in people, who are likely to understand the value of naming. In those cases the technologies may win on both the tech and the name, but that doesn't happen often enough for me to be sure it's a rule. There may be all kinds of other factors. 802.11a probably failed because 802.11b was already selling to the markets that 802.11a was targeted toward, and once WiFi (the name and the technology) took off, the network effect (so to speak) was so huge as to dwarf any other factors, but there was a crucial moment (sometime in 2001 or 2002) when the name probably had an effect.

I'm interested in other examples, especially ones where the technologies may have had comparable marketing support. And I'm trying to avoid comparing identical technologies (Firewire is IEEE 1394 and i.LINK, so comparing those is just comparing branding). Suggestions welcome.

July 22, 2004

When black boxes break

I experienced a black box failure today. The IC Igniter on my 1985 Kawasaki GPz 750 is going flaky, and there's nothing my mechanic can do about it. My motorcycle has been misbehaving for a while, generating so much buildup on the #1 spark plug (it's a 4-cylinder engine) that a new plug gets as much carbon buildup in 5 minutes as it would in 5000 miles on a normally working cylinder. This causes it to backfire and, eventually, makes the plug--and the cylinder--go dead, so I ride around on a bike that's weak, shakes and sounds like a truck.

At first my mechanic thought it was a problem with the plugs, so he had me put in new ones. Then he thought maybe it was dirt in the #1 carb (the bike also has four of these, one per cylinder), so I flushed it out with a solvent. Then we thought it was a short, but there seemed to be more than enough current going to the plug.

It turns out that the culprit is the IC Igniter, the only piece of computer equipment on the bike. My bike is from the very first generation of engines that had any kind of computer equipment in them. In the early 80s, car and motorcycle companies realized that they could get much better control over spark timing by using electronics, rather than the old mechanical distributor. So the distributor went away and was replaced by the unsexily-named "ignition module," a cluster of relatively simple electronics that monitors some sensors in the engine and processes that information to adjust the spark timing. The distributor was soon followed into extinction by the carburetor (whose job it is to mix air and fuel), which was replaced by electronic fuel injection.
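The module's job, as described above, is to turn sensor readings into a timing decision. Here's a minimal sketch of one common way to do that: look up spark advance from engine speed in a small table and interpolate between points. It assumes RPM is the only input (real modules may read other sensors too), and the curve values and the name `spark_advance` are hypothetical, invented for illustration rather than taken from the GPz 750's igniter.

```python
from bisect import bisect_right

# Hypothetical advance curve: (engine RPM, degrees of spark advance before
# top dead center). Invented values for illustration only.
ADVANCE_CURVE = [(1000, 10.0), (3000, 25.0), (5000, 35.0), (8000, 35.0)]


def spark_advance(rpm: float) -> float:
    """Linearly interpolate spark advance for a sensed RPM, clamped at both ends."""
    rpms = [point_rpm for point_rpm, _ in ADVANCE_CURVE]
    if rpm <= rpms[0]:
        return ADVANCE_CURVE[0][1]
    if rpm >= rpms[-1]:
        return ADVANCE_CURVE[-1][1]
    i = bisect_right(rpms, rpm)  # first curve point with a higher RPM
    (r0, a0), (r1, a1) = ADVANCE_CURVE[i - 1], ADVANCE_CURVE[i]
    return a0 + (a1 - a0) * (rpm - r0) / (r1 - r0)


print(spark_advance(2000))  # 17.5
```

A mechanical distributor did a similar job with centrifugal weights and springs; the electronic version swaps those for a table sealed inside the box, which is also why there's nothing left to adjust by hand when it fails.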

The benefits of this approach have made cars built in the last 20 years much more efficient, reliable, predictable and durable. All great stuff, except when something goes wrong. Now, when there's a problem, there's no way to fudge, tweak or adjust around it. Digital technology is binary: it works, or it doesn't (this isn't quite true: you can hack any digital technology if you know what you're doing, but you have to know a whole host of new information to hack electronics embedded in solid blocks of epoxy, which is how ignition modules are made).

One of the complaints that people have when they criticize the inclusion of information processing into everyday technology is this opacity of operation. Well, I experienced this first hand today, and, as someone who evangelizes for the introduction of information processing into everyday objects, I had to deal with an everyday object whose information processing was faulty. I tried to evaluate the effects the opacity had on my life: would it have been better if the bike, otherwise completely mechanical, did not have this electronic part to fail? My opinion: the module is worth it. My bike ran for almost 20 years with no ignition problems. Having all 4 carburetors adjusted cost me $400 earlier this year, and that's something that has to be done every 5 years. A new ignition module will cost me under $100 and the installation process will consist of popping my bike seat off, unplugging the old black box and plugging in the new one. I say it's a win for the black box.

July 20, 2004

WiFi becoming a utility

Last year, I posted a rambling analysis of why McDonald's WiFi plan didn't seem to make any sense (and, really, neither did my analysis, but my heart was in the right place ;-). Then I posted a follow-up where I mentioned the competitive landscape of San Francisco's WiFi cafe scene.

It seems that WiFi is becoming an infrastructural service, in that it's expected to be free, or not be available at all, like bathrooms. Jupiter just put out a report saying as much. I don't have access to the report, but here is their teaser for it, which is pretty much all I need to know:

In 2003, six percent of online consumers used public hotspots, and only one percent paid to use them. Not surprising, high-profile players in this space dropped out of the game in early 2004.

Key Questions

To what extent has adoption of public hotspots increased since 2003?

To what extent are consumers willing to pay for public wireless high-speed Internet access?

What should service providers do to drive paid adoption?

This may be the fastest move that a technology has ever made from being cutting-edge to being a utility (well, OK, calling it a utility is overstating things, but it's quickly approaching that). The one place paid access seems to work is Starbucks/T-Mobile, and then only for busy travelers or people who can get their company to pay for it (often the same group). I wonder if that model could work for...bathrooms? Private bathrooms all over the world, accessible to anyone with a monthly subscription. ;-)

Small tech bad for big furniture

In this USA Today story furniture makers are said to be scrambling to adapt their technology furniture to modern computer technology. They just figured out how to make decent computer desks, and look what happens: everyone moves to laptops and flatscreens.

I predict that this is only going to increase as the monolithic computers that we're using right now fragment into task-specific computer-based tools for living. It's not surprising that "writing desks return" (as per a subhead in the story): writing is what people care about. They care about the task, not the tool. The furniture provides a context in which to do the task and needs to accommodate the tool (whether it's a tower-case PC or an inkwell), but its purpose is to support the task.

Another interesting point is that furniture styles change more slowly than technology. That's absolutely true. In fact, other than the bleeding edge, the furniture styles that people actually buy are pretty much frozen in the 1950s: Country, French Provincial, Colonial, Eames Modernist (and probably a couple of others) represent 90% of the market. (Maybe I'm wrong about this, and there's a secret market for "70s Swinger" style that's still getting a lot of play, but I don't think so.) This is actually a good thing, since the adoption of smart furniture can leverage the expectations and modes of a host of existing furniture tropes (modalities, use cases, whatever you want to call the cluster of expectations people have for specific furniture pieces). The TV was a piece of furniture before it was an appliance (the Philco Predicta notwithstanding). Now it's pretty much unthinkable as a piece of furniture because culture has accepted it in its more natural state, but that intermediate stage was important for acceptance.

July 13, 2004

allmusic.com's bad redesign

The AllMusic relaunch is the worst I've seen in maybe five years. The amount of visual clutter seems to increase with every page, there's nonstandard DHTML that only works in IE, a largely useless Flash navigation widget, an enormous banner ad floating in whitespace, information that used to be in one place has been split across multiple screens, etc. I could go on, but suffice it to say that any general-public site redesign that requires a manual is a failure on a number of levels. And that's not even addressing the terrible performance problems, both on the browser end and--judging from the frequent unavailability of the site--on the server end.

But this is not the fault of producers who crossed the "should-can line" (a term Molly uses when talking about fashion: just because you can doesn't mean you should) when specifying fancy gadgets, or of designers who designed interfaces that could only work with IE, or of engineers who didn't load test the new design. No. They were never given guidance that would have allowed them to prioritize those things appropriately. The fault lies squarely with management.

A site rollout this bad and a design this convoluted point to a fundamentally damaged management structure. Only bad management (myopic, arrogant or dangerously negligent) can produce something this bad. The site owners took a site with great content and a loyal user base and, with little regard for what those people actually valued, decided to throw it away.

Here's my prediction of how the management decisions embodied in this design will affect the company in the next 24 months:

The management is probably too proud to admit that all of the effort of this relaunch is a failure. They won't throw it out, reinstall the old site and go back to the drawing board to examine the problems that led to this redesign. Instead, I predict that they'll try to push through and "fix" the site, which is of course not what's at fault, really. It's a symptom of a broken process. Someone's head will roll--either the head designer, engineer or product director, whoever "owns" the product. More pressure will be put on the development team, who are probably already demoralized and exhausted from the death march of having to launch the thing in the first place. Any system this broken will certainly have been a death march--the announcements over the last couple of weeks that it was launching were probably as much public threats to force the developers to launch it as they were to inform the audience (how did they expect the audience to prepare for a new design? Such announcements do not build buzz, they're symptoms of corporate fear). Within six months the good people will leave, since they don't need the stress brought on by management panic and pressure (and besides, they can put it on their resume now). New hires will have to learn the system and deal with the residual staff, who are likely to be the least qualified people on the old team, but who now have seniority and will have been promoted into the positions of the good people who left.

Ad revenue for this quarter, maybe the next two quarters, will go up as the sales team can use the new design to extract more money from their connections. The management may even convince themselves that this means it's a success, if a painful one. It's not. Within a year, the novelty of the new design will have worn off for ad buyers, who will be buying less. Sales will put pressure on product managers to create new features that will attract users.

All the while, users will be abandoning it. Even if they aren't actively spreading the word about how bad the site is, they'll stop telling others how good it is, diminishing whatever brand value AllMusic may have had (the other thing the new site was probably meant to build up). At some point, probably two years from now, the company will have laid off everyone and kept a skeleton crew of editors and writers as freelancers to preserve the illusion of fresh content. This will further dilute the value of the site, and eventually even the tiny cost of maintaining this group of underpaid creatives will no longer be justifiable. They'll then shut it down and sell the assets (i.e. the database of reviews) at a rock-bottom price to someone who will put up the static content with Google contextual ads pointing to Amazon and eBay. They'll extract the last little bit of value from it, like glue from a racehorse.

I certainly hope I'm wrong--I clearly like AllMusic enough to write all this--but I've seen it happen often enough. So what to do? Here's my first-order recommendation to the management of AllMusic: roll back the current design, admit you have a big organizational problem that allowed it to happen, try to understand what that problem was, and figure out what problems the redesign was trying to address. No, the REAL problems: the ones you're thinking of now are probably your guesses at solutions to deeper problems, so go one level, two levels back, to THOSE problems. Meanwhile, try to understand why people come to your site and why advertisers give you money, and start making small changes, one at a time, one per month, and watch the effect they have on how people use your site. In two years, you'll probably be making a lot more money than you would even if all of the gimmicks on the current site worked, which they don't.

July 12, 2004

Agile Ecosystems

I've spent the last couple of weeks preparing for the workshop William Pietri and I are going to be teaching in August. I'm really impressed with how you can take all of the references to writing code out of, for example, Agile Software Development Ecosystems and it still makes sense as a philosophy for how groups of people can collaboratively solve problems and make things. It may be the first fundamental rethinking of how things are made since Taylorism. That's not to imply that XP and the other agile methodologies are equivalent to Taylorism, but I think that they, as a philosophy of how to get groups of people to make stuff, are as profound a concept.

I'll write more about this as I finish reading and thinking about it, but I'm quite taken with the ideas at the moment.

July 01, 2004

Colin Martindale

When I wrote my rambling review/essay of John Heskett's Toothpicks & Logos, I linked to Colin Martindale's The Clockwork Muse: The Predictability of Artistic Change. I hadn't bothered to read the official reviews Amazon printed there; they deeply don't get it. I find it to be a problematic work, but a really interesting one that attempts to find a predictable pattern in creativity and probably does in several places. It came out before all of the emergent theory literature (like Six Degrees, which I also reference in that piece), so Martindale didn't have the tools to take his analysis further, and critics had no basis from which to evaluate the book, since it was--and still is--quite far out in left field.

I have also found a much better review of the book by Denis Dutton. In this review he summarizes the point of the book quite well:

Perhaps it’s just that I’ve become so habituated to the literary journals, but not only did I fail to find The Clockwork Muse boring, it was for me full of all sorts of revelations. Martindale writes with a calculated, in-your-face insolence, heaping contempt on critics, humanists, behaviorists, Marxists, philosophers, sociologists. He credits Harold Bloom for having half understood, in his bumbling English professor’s manner, the law of novelty, but doesn’t have much nice to say about many others except psychologists in his own field. He uses his various theses to analyze the histories of British, French and American poetry, American fiction and popular music lyrics, European and American painting, Gothic architecture, Greek vases, Egyptian tomb painting, precolumbian sculpture, Japanese prints, New England gravestones, and various composers and musical works.

A major lynchpin of the investigation concerns what he calls “primordial content,” roughly the emotional or emotionally expressive aspects of a work. Martindale argues that the arousal potential of works tends to require more primordial content as time go on in a particular art or style. Thus the natural progression will always be from classic to romantic, for greater musical forces, for more violent metaphors, larger, more extraordinary paintings, and so forth. The (Dionysian) primordial is contrasted with (Apollonian) conceptual, which involves, if I understand him, the stylistic mode of an art. Within an established style, primordial content in time must increase. When a style changes, primordial content will decrease. Thus art evolves.

In other words, novelty in form means that content can be less complex, but as we get tired of the form, content becomes more complex (or, in Martindale's unfortunate terminology, "primordial"). Think of electronic music: at first, it was all techno and disco, 133 bpm four-on-the-floor. It was a big hit. Now, 20 years later, there's a forest of subgenres. Why was the original formula not enough to sustain 20 years of dancing? People feel compelled to create ever more complex content when a new field of ideas opens. Is the pattern of that creation somehow predictable?

Dutton raises some very good questions about the book, but concludes--as I have--that it's far from useless because it asks many old questions in an entirely new way, a way that produces unexpected answers with a face validity that's hard to ignore. That said, the book has been on the remainder shelves pretty much since the day it was published, so it has in fact been ignored, and Martindale's assertions have never been validated or refuted on anything like the terms he framed them in. Too bad. I hope that now that emergent-property analysis tools exist, someone in need of a journal publication will use them to analyze Martindale's assertions.

June 08, 2004

Usability News on 2ad

2ad, the Second International Appliance Design Conference, was one of the best conferences I've been to. Small (150 people, though it seemed even smaller than that), focused (one track, carefully sequenced), brave (they scheduled a robot soccer tournament in the middle of the thing!) and smart. I was really flattered when they accepted my proposal to do a side show for their Bazaar, similar to UPA's Idea Market (which, incidentally, I--uh--was supposed to talk at later this week, but unfortunately can't attend--it looks great, though). I really like the Idea Trade Show concept behind this, and it seemed to work particularly well at 2ad. In fact, Ann Light, of Usability News, has written an excellent roundup of how it went.

I don't know if it's just my brain pattern matching, but it seemed that key ideas of two of my favorite gatherings--Burning Man and the TED conferences--were incorporated in, of all things, a corporate research lab context. Burning Man is the mother of all performance bazaars and TED is the best idea theater in the world, and 2ad managed to capture some of the magic of both. Props to the organizers. I can't wait to go next year.

June 07, 2004

My phone=me

There are few things outside our bodies that are as universally present with us as our phones. As Bluetooth beacons appear in our phones, our phones start broadcasting identifiers of us. Ignoring the potential privacy problems with this (yes, ladies and gentlemen, if every database in the world were linked, bad people could find out information about us that we don't want them to know), the idea of having a broadcast identifier of us is really powerful.

In one version of their "Familiar Stranger" project, Elizabeth Goodman and Eric Paulos use Bluetooth phone beacons to track the people we have previously shared a space with, in order to identify the landscape of near-miss acquaintances in our lives.

In their "Personalized Shared Ubiquitous Devices" article in the May/June 2004 Interactions magazine, David Hilbert and Jonathan Trevor of FXPAL discuss the idea of a "personal information cloud" which, like the "recent documents" menu, contains a list of recently used documents, but it's a list that's independent of an application or device:

For instance, editing a Word document on my desktop, or a PowerPoint presentation on my laptop, would populate my cloud with these documents. Furthermore, our clouds would become populated through the use of any computing device, including multi-function copiers [they are, after all, working for a copier-maker ;-) --mk]. So if I copied or scanned a document, it would appear in my cloud.

People access their information clouds using RFID cards and/or software running on PDAs.

Now, combine the idea of the Bluetooth identity beacon and the document cloud into a single office use scenario (for example) to create a smart hoteling cubicle (or work surface, for the cube-averse):

The cubicle recognizes who is in it by the Bluetooth beacon on their phone (rather than an RFID card or specialized software). The phone doesn't need to share any "real" information with the cubicle at all, just its unique ID. As you walk into the cubicle, it brings up your personal photo archive on the digital picture frame, asks the PBX to forward your extension to the local phone and brings up your desktop on the shared computer.

This is the trivial case. Now imagine two people in the cube together. I'm sitting at my desk and you walk in to chat. You're likely coming over to talk to me about something you're working on (let's assume). Since you're carrying your phone, the cube knows you're in the same space as me, so it gives my desktop computer instant access to your information cloud.

The next step from here is to keep a log of who was in your cube and when, and map that information to a calendar, maybe along with the files you worked on together and their most current versions.

Now let's move to a group work area--maybe a bullpen, lounge or conference room. Either a central computer (as described in the Interactions article) or a Bluetooth-enabled laptop can figure out who's in the general vicinity and create a folder called "People who are near me" that lists the information clouds of everyone nearby, since we're likely going to need each other's files while working together. That way, not everyone needs to bring a laptop in order to collaborate on a set of documents.
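To make the cube and group-room scenarios a bit more concrete, here is a minimal Python sketch, assuming some lower layer (not shown) already reports the set of Bluetooth device IDs currently in range. Everything in it is hypothetical: `Person`, `PresenceAwareSpace`, the registry, the device addresses and the file names are made up for illustration, and none of it comes from the Interactions article or the Familiar Stranger project.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Person:
    """Hypothetical record: a person and their 'information cloud'."""
    name: str
    cloud: list[str] = field(default_factory=list)  # recently used documents


class PresenceAwareSpace:
    """Sketch of a cube or meeting room that reacts to detected phone IDs.

    Assumes a lower layer (not shown) reports the Bluetooth device IDs in
    range. Only those opaque IDs are used; the phone shares nothing else.
    """

    def __init__(self, registry: dict[str, Person]) -> None:
        self.registry = registry  # hypothetical mapping: device ID -> person
        self.log: list[tuple[datetime, str]] = []  # (time seen, person name)

    def people_present(self, detected_ids: set[str]) -> list[Person]:
        # Unregistered devices (e.g., a visitor's phone) are ignored.
        return [self.registry[d] for d in sorted(detected_ids) if d in self.registry]

    def scan(self, detected_ids: set[str], when: datetime) -> dict[str, list[str]]:
        """Log who is present and build the 'People who are near me' folder."""
        present = self.people_present(detected_ids)
        for person in present:
            self.log.append((when, person.name))
        return {person.name: list(person.cloud) for person in present}


# Usage with made-up device IDs and documents.
registry = {
    "00:11:22:33:44:55": Person("me", ["budget.xls", "talk.ppt"]),
    "66:77:88:99:AA:BB": Person("you", ["notes.doc"]),
}
room = PresenceAwareSpace(registry)
folder = room.scan({"00:11:22:33:44:55", "66:77:88:99:AA:BB", "unknown-device"},
                   datetime(2004, 6, 7, 10, 0))
print(folder)  # {'me': ['budget.xls', 'talk.ppt'], 'you': ['notes.doc']}
```

Keying everything on an opaque device ID mirrors the point that the phone needn't share any "real" information, and the `log` list is the raw material for the who-was-here-when calendar idea.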

Treating a Bluetooth-enabled phone as an identity marker, "my phone = me", we can start to ask a whole new set of questions about how identifying ourselves to our surroundings can affect the way we use those surroundings. Moreover, this is a technology that augments existing technologies and ways of work, rather than requiring new ways of working and new technologies to understand, and it does it passively. Even if the implications of losing your cell phone now become that much more dire, I think that's hot.

June 04, 2004

Toothpicks, logos and definition of design

I just finished reading John Heskett's Toothpicks & Logos: Design in Everyday Life. It's an interesting counterpart to Bill Stumpf's The Ice Palace That Melted Away: How Good Design Enhances Our Lives and Virginia Postrel's The Substance of Style (which I discuss here). All three books make a case for the importance of design.

Stumpf, one of the designers of the Aeron, writes an intimate personal description--almost an autobiography--of how design has shaped his world and why he thinks it's a crucial part of civil life, though his reasons are primarily personal. In that respect, it's an insider's book for insiders. Postrel, an outsider, states that design is important because people find it important and that it should not be ignored for that reason. Hers is an outsider's book for outsiders. Heskett takes the middle track between the two. He's an insider to the field, but his book is for people outside it. It's positioned as an explanation of why design makes a difference, not in terms of how it fits into a vision of proper living, but in terms of how its effects are felt throughout society.

He starts by acknowledging that design is nebulous and hard to define:

As a word it is common enough, but it is full of incongruities, has innumerable manifestations, and lacks boundaries that give clarity and definition. As a practice, design generates vast quantities of material, much of it ephemeral, only a small proportion of which has enduring quality.

He then goes on to present a history of design, and attempts to define the practice by examining the typical places where design is found: objects, communication, environments and identities. He then uses each of these headings to examine more subtle aspects of design. So, for example, when talking about objects, he describes the different kinds of industrial design practices (individual versus group, internal versus external, etc.).

An interesting point he makes early on is that design is more of a work practice than a set of defined tools:

Most practical disciplines, such as architecture and engineering, have a body of basic knowledge and theory about what the practice is and does that can serve as a platform, a starting point, for any student or interested layman. The absence of a similar basis in design is one of the greatest problems it faces.

This really reminds me of the talk Bonnie John gave at IBM's NPUC conference last year. In it, she argued that HCI--which is clearly becoming a design discipline, or the two are merging--is pretty low on the Capability Maturity Model scale:

| Level | Focus | Key Process Areas |
|---|---|---|
| 5 Optimizing | Continuous process improvement | Acquisition Innovation Management; Continuous Process Improvement |
| 4 Quantitative | Quantitative management | Quantitative Acquisition Management; Quantitative Process Management |
| 3 Defined | Process standardization | Training Program; Acquisition Risk Management; Contract Performance Management; Project Performance Management; User Requirements; Process Definition and Maintenance |
| 2 Repeatable | Basic project management | Transition to Support; Evaluation; Contract Tracking and Oversight; Project Management; Requirements Development and Management; Solicitation; Software Acquisition Planning |
| 1 Initial | Competent people and heroics | |

(table borrowed from here)

John's comment was that HCI needed to be more like other engineering disciplines, that there's a lack of rigor, consistency and standardization in the field. This also seems to be bothering Heskett, at least in terms of how it makes it harder to legitimize the field. Frankly, I think that the field isn't ready for it. Before design can become engineered, the basic building blocks need to stabilize. In much of the engineered world, the one that John and Heskett want design to be like, history allowed a relatively limited number of materials (wood, stone, brick, iron) to dominate long enough that their basic properties could be explored and processes defined in fairly predictable ways. If you consider modern materials (for my purposes here, consider information to be a material), their properties are barely explored from an engineering standpoint compared to the old ones. At the Metropolis design conference in New York, there was a lecturer talking about carbon fiber. Carbon fiber seems like an old "new material," but the lecturer complained that designers still don't know how to use it correctly.

So maybe design isn't a discipline at all, and all of the attempts to pin it down are doomed to fail. Maybe design is a stage in the evolution of our understanding of a material and how to manipulate it, on the way from art and pure science to engineering?

OK, that's pretty far out, I admit, but I think there's some truth to it, since at least one aspect of design--the use of materials--is clearly on a continuum between pure science and engineering (again, I'm treating information--and humans' reactions to it--as a kind of material, though I realize it's not exactly like, say, paint).

However, as we know, design isn't just a functional discipline. It's an amalgam of function and form. Don Norman recently discovered this for himself and wrote a good book about it, though it's been there all along. The problem this amalgamated state introduces is that people, unlike materials, don't behave in linear ways. People are driven by power laws and habituation (at some point I'll write about these two books, which, although quite different, almost certainly describe related phenomena that are at the core of human behavior). In other words, we have a really hard time comprehending more than a handful of things, and we get bored, which is not how materials behave. So when Heskett criticizes Starck's ridiculous juicer, his criticism rings strangely angry:

To have this item of fashionable taste adorn a kitchen, however, costs some twenty times that of a simple and infinitely more efficient squeezer--in fact, the term 'squeezer' should perhaps be more appropriately applied to profit leverage, rather than functionality for users.

OK, whatever. Clearly people are buying it for some reason that's unrelated to how well it makes juice that they could be buying in a carton, anyway. As Postrel argues, the success of design is ultimately dependent on human perceptions and its definition needs to have that at its core.

That said, much of Heskett's book is a very good description of how design actually happens, and he's not afraid to editorialize about problems he sees, such as this comment on the cult of design stars:

The emphasis on individuality is therefore problematic--rather than actually designing, many successful designer 'personalities' function more as creative managers.

He finishes the book with two chapters, one entitled "Systems" and one "Contexts," in which he tries to pull back and explain what it all means. "Systems" describes how designs don't, and shouldn't, function in a vacuum, but it ends on a curious note of resignation about design's ability to change the world:

If we can understand the nature of systems in terms of how changes in one part have consequences throughout the whole, and how that whole can effect [sic] other overlapping systems, there is the possibility at least of reducing some of the more obvious harmful effects. Design could be part of the solution, if appropriate strategies and methodologies were mandated by clients, publics, and governments to address the problems in a fundamental manner. Sadly, one must doubt the ability of economic systems, based on a conviction that the common good is defined by an amalgam of decisions based on individual self-interest, to address these implications of the human capacity to transform our environment. Design, in this sense, is part of the problem.

I disagree. Design is neutral; it can neither harm nor help by itself. It is the grease that makes tools work better and be more satisfying. But both artificial hearts and guns have lubricants, and designing a better lubricant is neither better nor worse for the world. To think that the practice should have a morality is asking too much of it. In the end, we can only hope that all of the design in the world, and all of the organizations supporting it (which he describes so well in the "Contexts" chapter), will make more of people's products and lives better than worse.

March 28, 2004

The meaning of 'robot'

In the March 11 "Technology Quarterly," the Economist's story on domestic robotics ("The gentle rise of the machines") concluded with a summation that captures my thoughts on the subject pretty well: